The most expensive bugs I’ve seen in large organizations did not come from exotic edge cases. They came from something far more boring: a test run that “passed” on an unstable environment, only to blow up in production. Industry estimates suggest that a defect costing around $100 to fix early in the lifecycle can become a $10,000 problem once it slips into production. In other words, test environment chaos is not a nuisance. It is a profit leak.

In many teams, test environment management still sits at the bottom of the priority list. Yet it is one of the quiet foundations of QA stability and credible enterprise testing, which is why enterprises increasingly rely on modern quality engineering services to bring discipline, repeatability, and reliability into their QA environments.

Why do test environments fail so often?

Most organizations don’t set out to have chaotic environments. Chaos emerges because environments evolve faster than documentation, ownership, and funding models around them.

Common failure modes include:

· Shared environments with no clear booking rules

· “Snowflake” setups created manually and never reproducible

· Partial or stale test data that no longer represents real usage

· Environment changes applied directly on servers instead of through scripts

· No metrics for environment uptime or flakiness, only anecdotal complaints

Research on test environment stability highlights that unstable environments reduce the reliability of test results and correlate with more production defects and higher operational costs. When you treat environments as disposable “stages” instead of long-lived assets, you naturally underinvest in their design, monitoring and lifecycle management.

The result is a subtle but very real tax:

· Testers spend hours re-running tests to confirm whether a failure is real

· Developers stop trusting QA feedback because “it works on my machine”

· Defect leakage metrics look worse, even when test coverage is good

This is exactly where deliberate test environment management becomes less of an operational chore and more of a risk-control function.

The real challenge: dependency blast radius

Test environments rarely fail in isolation. They fail at the seams between systems.

In modern enterprise testing, a single end-to-end flow can cut across:

· Dozens of microservices

· A legacy mainframe or monolith

· Third-party payment, KYC or messaging providers

· Feature flags, config services and identity providers

· Data pipelines that precompute recommendations, pricing or fraud signals

You often have two conflicting pressures:

1. Product teams need realistic dependencies to catch integration defects.

2. Platform teams need control to avoid every team hard-coding against live systems.

That creates a “dependency blast radius” problem. A small change in a shared component (schema tweak, TLS setting, API rate limit) ripples across multiple environments and test suites. The environment appears flaky, when in reality you have ungoverned dependency contracts.

A more mature approach to test environment management treats dependencies as first-class design elements:

· Define explicit “environment contracts” for each dependency

· Version those contracts, just like code APIs

· Provide certified stubs or sandboxes for high-risk external services

· Track which environments use which contract versions

This shifts the question from “Why is QA so unstable?” to “Which contract changed, and who owns that decision?”
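The contract tracking described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `Contract` and `ContractRegistry` classes, the environment names, and the dependency names are all hypothetical, and a real registry would likely live in a service catalog or config repository rather than in memory.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Contract:
    """One version of an environment contract for a dependency."""
    dependency: str  # hypothetical name, e.g. "payments-api"
    version: str     # version label for the contract, e.g. "2.1.0"
    owner: str       # team that owns changes to this contract


@dataclass
class ContractRegistry:
    """Tracks which contract version each environment is pinned to."""
    # environment name -> {dependency name -> pinned contract}
    pins: Dict[str, Dict[str, Contract]] = field(default_factory=dict)

    def pin(self, environment: str, contract: Contract) -> None:
        self.pins.setdefault(environment, {})[contract.dependency] = contract

    def out_of_date(self, current: Contract) -> List[Tuple[str, str]]:
        """List (environment, pinned_version) pairs that differ from `current`."""
        stale = []
        for environment, deps in sorted(self.pins.items()):
            pinned = deps.get(current.dependency)
            if pinned is not None and pinned.version != current.version:
                stale.append((environment, pinned.version))
        return stale


# Hypothetical environments and dependencies, for illustration only.
registry = ContractRegistry()
registry.pin("qa-shared", Contract("payments-api", "2.0.0", "payments-team"))
registry.pin("uat", Contract("payments-api", "2.1.0", "payments-team"))
registry.pin("perf", Contract("kyc-sandbox", "1.4.0", "risk-team"))

latest = Contract("payments-api", "2.1.0", "payments-team")
for environment, pinned_version in registry.out_of_date(latest):
    print(f"{environment} still uses {latest.dependency} {pinned_version}; ask {latest.owner}")
```

Even a registry this small answers both halves of the question: which environments are on a different contract version, and which team owns the change.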

Table: Is it a test or the environment?

| Symptom | Likely Root Cause | First Place to Look |
| --- | --- | --- |
| Test fails only at certain times of day | Competing jobs, batch processes, shared infra | Environment resource calendar, job scheduler |
| Test passes locally but fails in shared QA | Config drift, missing feature flag | Config repo, environment diff vs dev |
| Random timeouts across multiple suites | Network or dependency instability | Dependency health checks, service SLOs |
| Same test flaky across all teams | Core platform or data issue | Shared services, pipeline runs |

A simple table like this, used in triage, avoids the default instinct to blame the testers.
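The “environment diff vs dev” check from the table can be as simple as comparing two exported config maps. The sketch below assumes flat key/value settings; the `diff_config` helper and the setting names are hypothetical, and nested or secret-bearing configs would need more care.

```python
from typing import Any, Dict


def diff_config(baseline: Dict[str, Any], candidate: Dict[str, Any]) -> Dict[str, Any]:
    """Compare two flat config mappings and report drift in the candidate."""
    missing = sorted(key for key in baseline if key not in candidate)
    extra = sorted(key for key in candidate if key not in baseline)
    changed = {
        key: (baseline[key], candidate[key])
        for key in sorted(baseline)
        if key in candidate and baseline[key] != candidate[key]
    }
    return {"missing": missing, "extra": extra, "changed": changed}


# Hypothetical settings exported from a config repo for two environments.
dev = {"feature.new_checkout": True, "db.pool_size": 10, "tls.min_version": "1.2"}
shared_qa = {"db.pool_size": 5, "tls.min_version": "1.2", "cache.ttl_seconds": 60}

drift = diff_config(dev, shared_qa)
print(drift)
```

Here the missing `feature.new_checkout` flag and the smaller pool size are the first suspects when a test passes locally but fails in shared QA.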

Building stable and reusable setups

Most teams say “we have automation,” but mean only test automation. High-performing teams automate the environment lifecycle itself.

A reusable environment pattern usually has four ingredients:

1. Blueprints, not tickets

Every environment type (E2E, performance, UAT, exploratory) gets a blueprint describing:

a. Purpose

b. Topology

c. Allowed data sets

d. Expected lifetime and concurrency

This is living documentation, not a static Confluence page.

2. Infrastructure as Code as the only entry point

No manual edits on servers. Provisioning, configuration, and network rules flow through IaC and configuration management tools. That allows you to:

a. Rebuild an environment from scratch

b. Run environment “diffs” when a defect appears

c. Embed security approvals into the provisioning process

3. Test data as a product

Test data isn’t just a database dump. You need curated, masked, labeled data sets for specific classes of tests:

a. Functional flows

b. Edge cases and negative scenarios

c. Performance and volume tests

This data should be versioned and refreshed with the same discipline as code.

4. Environment fitness checks

Before any test suite runs, an automated checklist asserts that the environment is “fit to test”:

a. Key services up and healthy

b. Critical background jobs running

c. Expected data slices present

d. Feature flags at known values
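A “fit to test” gate like the one in the fourth ingredient can start as a dictionary of named checks that runs before the suite. The sketch below is an assumption-laden illustration: `failed_fitness_checks`, the `snapshot` structure, and every service, job, and flag name are hypothetical stand-ins for calls to real health endpoints, schedulers, and flag services.

```python
from typing import Callable, Dict, List


def failed_fitness_checks(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run every check and return the names of those that failed or raised."""
    failed = []
    for name, check in checks.items():
        try:
            passed = bool(check())
        except Exception:
            passed = False  # a check that cannot run counts as a failure
        if not passed:
            failed.append(name)
    return failed


# Hypothetical snapshot; real checks would query health endpoints, the job
# scheduler, the test database and the feature-flag service.
snapshot = {
    "services": {"orders": "healthy", "payments": "healthy"},
    "jobs_running": {"nightly-pricing"},
    "synthetic_users": 250,
    "flags": {"new_checkout": False},
}

checks = {
    "key services healthy": lambda: all(
        state == "healthy" for state in snapshot["services"].values()
    ),
    "background jobs running": lambda: "nightly-pricing" in snapshot["jobs_running"],
    "data slices present": lambda: snapshot["synthetic_users"] >= 100,
    "feature flags at known values": lambda: snapshot["flags"] == {"new_checkout": True},
}

failed = failed_fitness_checks(checks)
if failed:
    print("Environment not fit to test:", ", ".join(failed))
else:
    print("Environment fit to test")
```

Running this as the first pipeline step means a wrong feature flag is reported as an environment problem before any test has a chance to fail because of it.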

These practices look simple, but once you apply them consistently, test environment management stops being reactive firefighting and starts looking like an engineering practice.

Tooling that helps, not distracts

Tooling for environments is a crowded space. Rather than chasing shiny new dashboards, I tend to frame the stack around four questions.

Can we create and retire environments safely?

Here, infrastructure-as-code tools like Terraform or CloudFormation, plus pipeline engines (GitHub Actions, GitLab CI, Jenkins, Azure DevOps), are the backbone. They provide:

· Repeatable provisioning of entire stacks

· Policy checks before changes go live

· Audit trails of who changed what and when

Can we see the environment’s health at a glance?

You need observability for environments just as much as for production:

· Logs and metrics per environment, not just per service

· Dashboards that clearly separate “test failures” from “environment failures”

· Flaky test tracking where environment-based failures can be tagged and trended

When you start correlating environment incidents with defect leakage or re-run rates, you can finally quantify how poor test environment management is hurting delivery.

Can we manage bookings and conflicts?

In large enterprise testing programs, shared environments are unavoidable. Basic calendars are not enough. Teams benefit from:

· Environment scheduling tools or plugins (for example, integrated with Jira)

· Reservation workflows tied to releases and test cycles

· Simple rules, such as “no overlapping performance and functional runs”
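A rule such as “no overlapping performance and functional runs” is easy to encode once bookings are structured data. The sketch below is illustrative only: the `Booking` class, the environment and team names, and the choice of half-open time intervals are assumptions, and it enforces just that one rule (two functional runs may still overlap).

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Booking:
    environment: str
    team: str
    kind: str  # "performance" or "functional"
    start: datetime
    end: datetime  # exclusive: a booking ending at 12:00 frees the slot at 12:00


def overlaps(a: Booking, b: Booking) -> bool:
    """True when both bookings target the same environment at the same time."""
    return a.environment == b.environment and a.start < b.end and b.start < a.end


def conflicts_with(new: Booking, existing: List[Booking]) -> List[Booking]:
    """Return existing bookings that break the performance/functional rule."""
    return [
        booked
        for booked in existing
        if overlaps(new, booked) and {new.kind, booked.kind} == {"performance", "functional"}
    ]


# Hypothetical bookings for a shared QA environment.
existing = [
    Booking("qa-shared", "checkout", "functional",
            datetime(2024, 5, 6, 9, 0), datetime(2024, 5, 6, 12, 0)),
]
request = Booking("qa-shared", "platform", "performance",
                  datetime(2024, 5, 6, 11, 0), datetime(2024, 5, 6, 13, 0))

for clash in conflicts_with(request, existing):
    print(f"Rejected: overlaps {clash.team}'s {clash.kind} run on {clash.environment}")
```

The same check can run inside a reservation workflow so that conflicts are rejected at booking time rather than discovered mid-run.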

Can we isolate or simulate risky dependencies?

For external services and high-cost components:

· Use contract testing frameworks to pin expected behavior

· Provide emulators or sandboxes with known behavior and failure modes

· Maintain a registry of which test suites can run against mocks vs live services

The goal is not to buy every tool in the market. It is to choose a small stack that gives you control where it matters for QA stability.

Scaling stability across teams

Stability is not a QA-only responsibility. It is an organizational habit.

To bring order to test environment chaos at scale, I usually recommend three structural moves.

Create a small environment platform team

Not a “ticket factory,” but a product team with a clear charter:

· Provide standard environment blueprints and automation

· Maintain central observability and booking tools

· Set guardrails and minimal contracts for dependencies

They partner with domain teams, but they own the horizontal practice of test environment management.

Define SLOs for environments, not just for apps

If you already track SLOs for production, introduce similar targets for environments:

· Minimum availability during planned test windows

· Maximum allowed flaky rate attributed to environment issues

· Time to restore a broken environment to a “fit to test” state

When environment SLOs are visible beside release metrics and defect leakage, discussions around QA stability stop being emotional and start being data-driven.
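The three targets above reduce to simple arithmetic over data most teams already collect. The sketch below is a rough illustration under stated assumptions: the function names, the sample numbers, and the target values are all hypothetical, and it treats the length of each outage inside a planned test window as the time to restore.

```python
from statistics import mean
from typing import Dict, List


def measure(window_minutes: float, outage_minutes: List[float],
            total_runs: int, env_attributed_failures: int) -> Dict[str, float]:
    """Compute the three environment indicators for one reporting period."""
    return {
        "availability": 1.0 - sum(outage_minutes) / window_minutes,
        "env_flaky_rate": env_attributed_failures / total_runs,
        "mean_minutes_to_restore": mean(outage_minutes) if outage_minutes else 0.0,
    }


def slo_status(measured: Dict[str, float], targets: Dict[str, float]) -> Dict[str, bool]:
    """Map each indicator to True when its target is met."""
    return {
        "availability": measured["availability"] >= targets["min_availability"],
        "env_flaky_rate": measured["env_flaky_rate"] <= targets["max_env_flaky_rate"],
        "mean_minutes_to_restore":
            measured["mean_minutes_to_restore"] <= targets["max_minutes_to_restore"],
    }


# Hypothetical numbers: 40 hours of planned test windows, two outages,
# and 12 of 400 test runs tagged as environment-caused failures.
measured = measure(window_minutes=2400.0, outage_minutes=[30.0, 90.0],
                   total_runs=400, env_attributed_failures=12)
targets = {"min_availability": 0.99, "max_env_flaky_rate": 0.02,
           "max_minutes_to_restore": 60.0}

status = slo_status(measured, targets)
for name, met in status.items():
    print(f"{name}: {measured[name]:.3f} ({'met' if met else 'missed'})")
```

Publishing a small report like this next to release metrics is one way to make the environment's contribution to re-runs and delays visible.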

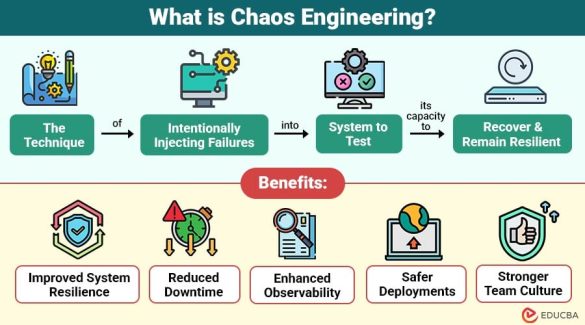

Practice “environment chaos days” safely

Taking a cue from chaos engineering, some teams run controlled experiments in non-critical environments:

· Intentionally degrade a dependency (add latency, reduce capacity)

· Break a configuration or rotate a certificate earlier than planned

· Simulate data corruption for a small slice of synthetic users

The goal is to validate not just resilience of services, but also the human and process response:

· Did the right dashboards light up?

· Did testers spot the pattern and categorize it as an environment issue?

· Did runbooks help restore normal operation?

Done a few times a year, this kind of drill exposes brittle spots in your enterprise testing architecture well before a production release.

Closing thoughts

Environment chaos is easy to ignore because it hides behind flaky tests, reruns and “temporary issues.” Yet the financial impact is well documented. Later defects are dramatically more expensive, and unstable test environments are one of the quiet reasons they slip through.

If you treat test environment management as:

· A designed system with contracts and blueprints

· A product with users, SLOs and roadmaps

· A shared responsibility across QA, dev and platform teams

then you stop firefighting and start building predictable delivery. The payoff is simple: fewer nasty surprises after go-live, more credible test results and a QA function that is trusted not just to find bugs, but to tell the truth about release readiness.